Caching in ANKHOR FlowSheet

Caching is a mechanism that is used in many areas in computing. A hardware cache keeps data closer to the processor core, avoiding the memory latency. A disk cache avoids expensive read or write operations to disk by maintaining copies of data in main memory or combining smaller writes into larger chunks. A program may cache pre-computed data to avoid repeated evaluation of the same function.

Caching in ANKHOR is done in four different ways:

- Implicit caching, where the user does not specify nor notice that data is to be cached

- Macro level caching, a macro is requested to cache its results

- Explicit caching using cache operators

- Sheet level caching when using the ANKHOR HTTP server

The purpose of caching is to improve performance in the most frequent case without significantly delaying the rare cases. There is always a cost to caching in memory and CPU consumption, so it is not always beneficial to use it.

Implicit Caching

Implicit caching happens in many areas inside ANKHOR. It goes unnoticed but improves many common operations. I will list here some of the caches to give a rough view on what is cached:

- Table Heads: Whenever a new table row is created there needs to be a table head that keeps the names and types of the columns. Creating many similar rows in e.g. a loop will result in the same table head. This is implemented using a cache of tables heads that is queried, whenever a new head is needed.

- Formats: Conditional formatting of table cells using the “buildformat” operator will generate many identical format values. To avoid creating the same format again and again format values are cached for reuse. A similar cache is employed for formatted values.

- Strings: It is quite common that the same string is used many times in a flow sheet as e.g. a table cell value. Strings have a non-fixed size, so they cannot be kept in the fixed size of a table cell, but have to be allocated on the heap. The string cache reduces the number of string values that have to be allocated and destroyed. The same is done for short bit sequences.

- Public Values of Objects: Objects have a private and a public state. The public state may be evaluated on request by putting an operator into the public cell of an object. To avoid re-evaluation of the public value whenever the user inspects it, they are cached for a short time period.

- Row Dictionaries: There are no explicit index columns in ANKHOR tables, yet it is important to perform many fast searches on some of the columns. ANKHOR creates index hashes on demand and caches them for re-use.

- SQL Connections: Opening and closing SQL connections is expensive, therefore ANKHOR caches open connections for a while.

- Activation Frames: Each graph that is executed needs an activation frame to store the intermediate results of the connections. A graph that is marked as “compressed” may discard its frame, once it is completed. A loop operator will result in many similar activation frames allocated and discarded during execution. This churn is reduced by a cache of activation frames that allows fast recycle of frames without the need to go through the memory allocator.

The memory allocator itself uses various levels of caching to speed up allocating and releasing of common memory chunk sizes.

Macro Operator Caching

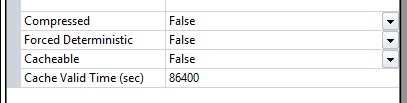

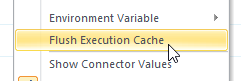

The graph of a macro can be set to cacheable using the “Cacheable” setting in the Property Editor pane. A cacheable operator caches the results of all executions for a given time.

The cached results will be used when a matching execution of the same macro is encountered. This can be either caused by a loop, recursive execution or when the macro is part of a library operator (aka operator class).

Classic use cases for cacheable macros are e.g. file read macros that would take a long time, functions that are expensive to evaluate or accesses to database records. A cache lookup is not for free, a unique hash value has to be calculated for the input values. Caching of operators that expect large input values is thus less advisable. The unique hash for large tables is cached as well, so if a small set of tables is fed into the cached macro this cost is avoided.

Some good examples from the ANKHOR libraries for operators that cache expensive functions are:

- A word stemmer that reduces words to their basic form. Stemming is performed by a sequence of string operations thus quite expensive to perform for a huge number of strings. The input keys are short strings words so they are simple to hash and the cache hit rate is usually high.

- Parsers and Graph builders for SQL, time series or graph DB execution. The same sub operations are frequently re-executed and re-building SQL statements or operator graphs is more expensive than building the hash from tag lists.

- The polygon builder code for map data. When scrolling through maps, the same feature is re-drawn for every frame.

- The force based graph layouter is caching the placement of nodes. The placement does not depend on formatting such as colours or the content of the nodes or highlight of edges. It can thus be re-used when only those features of the graph change.

Caching can also be used to reduce memory consumption. An operator that computes large results in a loop e.g. table rows or strings that are frequently similar can unify these results by tunnelling the individual results through an empty macro operator with enabled caching. The computed result will then be replaced by the cached result in case of a hit.

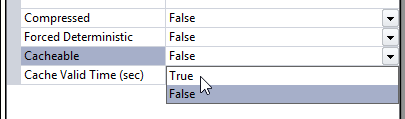

A classic use case for caching in recursion is the Fibonacci series Fn=Fn-1+Fn-2.

Evaluating the series in this recursive way has exponential runtime although only a limited number of distinct function values is evaluated. Setting the internal macro to be cacheable avoids this exponential runtime and results in a linear time. The F35 without caching takes 11 seconds on my machine, the version with caching is done in less than 1 millisecond.

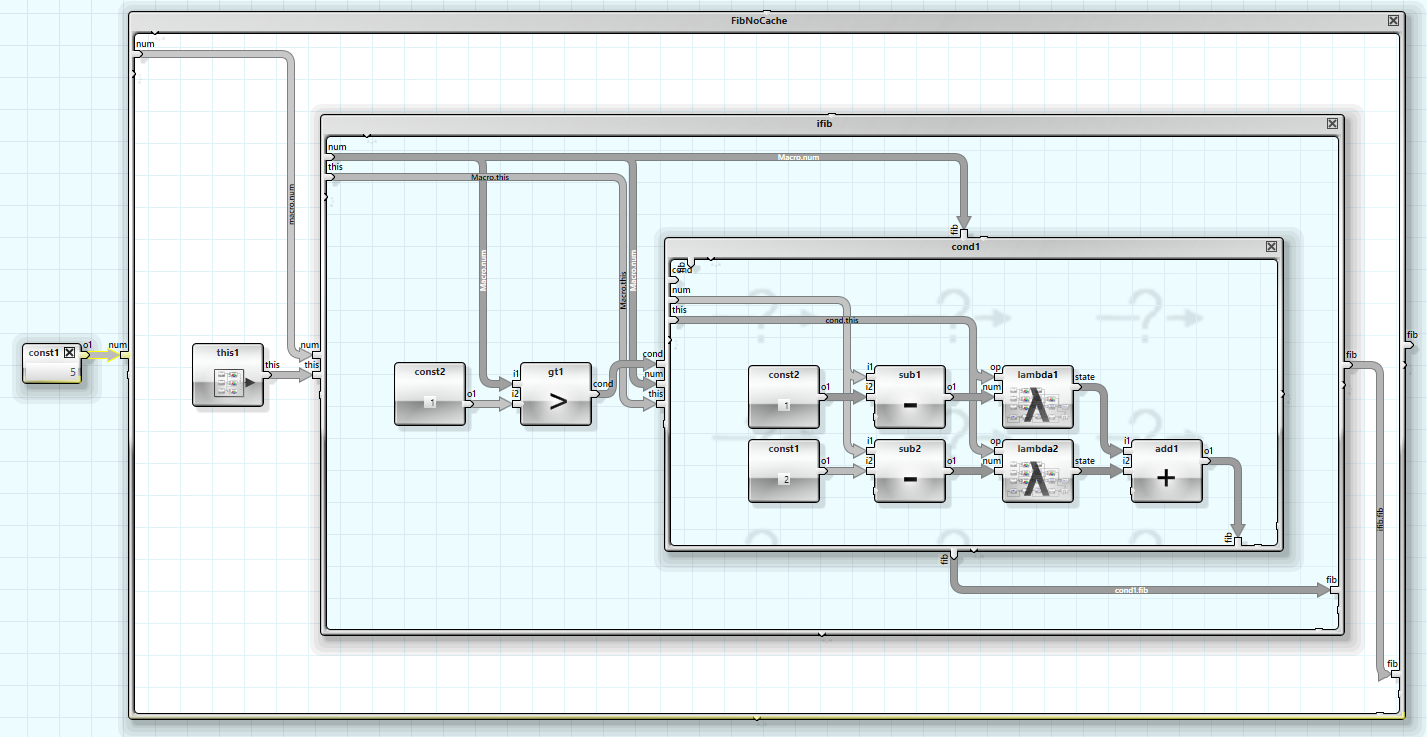

The key of the macro cache is based on the input values and the structure of the macros, so if the macro changes the cache will be invalidated. If the macro on the other hand accesses external data, such as files or database entries, it might become stale without ANKHOR knowing. The value cache can thus be manually flushed using the context menu.

Explicit Caching using Operators

Caching using macros is simple and easy to use, but has some drawbacks that can be avoided using the explicit caching operators.

- Caching using macros is not persistent. The cache result is flushed when the FlowSheet is closed and has to be rebuilt the next time it is loaded. The explicit operators allow persistent caching to disk.

- The macro cache cannot be flushed from within the FlowSheet, thus it should not be used for data in an external storage that may change due to execution of the sheet.

- The cache key of a cached macro is calculated using an MD5 hash of the input data and the operator structure. This can be more expensive than necessary if a simple unique key is known for the data. The explicit operators allow any type of key.

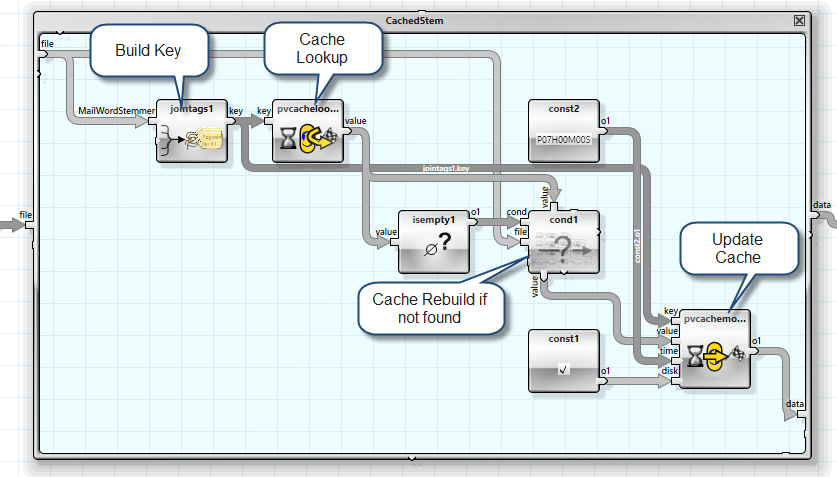

Two operators are used in combination to implement the explicit caching. The “pvcachelookup” operator searches the in-memory and persistent cache using an explicit key as input. It returns the empty value if no match is found. The “pvcachemodify” operator is used to place a new value in the cache, flush the cache or bump the cache valid time. It receives the cache key as well as the key value and control parameters.

The standard use of these operators is thus:

- Building the key using a unique value and a tag key that represent the type of cache

- Looking up the cache and checking for a miss using the “isempty” operator

- A conditional operator that rebuilds the cache value in case of a miss

- Storing or refreshing the cache value with the new or old value and the key

The cache entry can be flushed by putting the empty value into the value input of the “pvcachemodify” operator.

Persistent cache values are be stored in a lazy manner to disk with the help of a background thread.

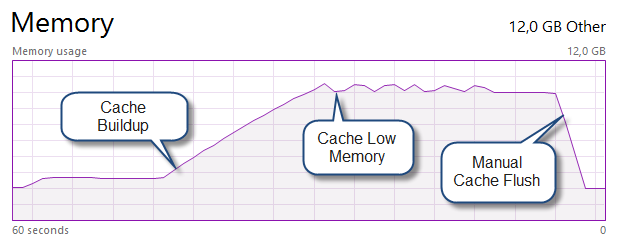

Low Memory Situations

The ANKHOR execution engine continuously monitors the amount of free memory and starts to flush cache entries when memory is low.

The flushing order is based on an LRU algorithm, flushing entries that have not been accessed for a longer period first.

There is also a background trimming thread that continuously monitors the various caches and flushes entries that are stale or have not been used for a longer time. This thread runs more aggressive if the FlowSheet is idle for a longer time, thus reducing system memory pressure.

Caching the Data provided by the ANKHOR HTTP Server

The ANKHOR HTTP server caches the results of FlowSheets to avoid re-evaluation if the flow sheet is deterministic. A FlowSheet is considered deterministic if it is free of non-deterministic operators such as random operators or external data sources. An operator graph that uses non-deterministic operators can be forced to be considered deterministic using an entry in the graph's property pane.

Other cached elements are generated images, such as colour flows or diagrams. All images that are delivered are put in a cache using their MD5 checksum. This also results in unique URIs for the same images.

Download FlowSheet and sample data (ZIP archive)